How Reliable Is Our Ethical Behavior?

Morality and ethical behavior are foundational to human cooperation and success. But moral action can be distorted by misinformation, emotions, and the influence of others.

The content of this section, unless indicated, represents Robert Ornstein’s award-winning Psychology of Evolution Trilogy (God 4.0, The Evolution of Consciousness and The Psychology of Consciousness) and Multimind. It is reproduced here by kind permission of the Estate of Robert Ornstein.

The rise of social media has allowed misinformation about everything from politics to health, history to science to spread at unprecedented speed, raising concerns about the difficulty of distinguishing fact from fiction. We are challenged by “fake news” that “could have been true.”

Ethical behavior is based on information filtered through our beliefs and our ability to separate true from false. Even when people initially recognize information as false, they may later, after hearing it repeated often enough, mistake their familiarity with it for a sign of its truthfulness. But that’s not all. It turns out that mere exposure to misinformation, perhaps especially in the form of vivid narratives such as “fake news” stories, may encourage people to imagine how it could have been true. Once we do this, studies show that we may be more inclined to let people off the hook for asserting that it is true, especially if our beliefs align with theirs.

Daniel A. Effron of the London Business School ran experiments showing that when a falsehood aligns with our political preferences, and we are prompted to reflect on how it could have been true, we tend to judge it as less unethical to tell. We also judge the politician who told it as having a more moral character and deserving less punishment than when an equivalent falsehood is told by a politician who does not align with our political preferences. Similar results emerged independently in three studies, regardless of whether participants considered the same falsehoods or generated their own.

For example, Effron points out that to defend the false claim that more people attended Donald Trump’s inauguration in 2017 than Barack Obama’s, spokespeople proposed the notion that Trump’s crowd would have been larger if the weather had been better. Or some people might justify Hillary Clinton’s false claim that no Trump-brand products are made in the United States by imagining they would have been made abroad if it had been cheaper to do so. These conditional propositions—if circumstances had been different, then an event would have occurred—are called counterfactuals. Logically, they do not render falsehoods true, but psychologically, they may make falsehoods seem less unethical.

It turns out that our moral judgments depend not only on what actually is true but also on what feels true to us. We are more likely to lie about achieving a goal if we almost did so than if we were nowhere close. We judge future events as more likely to occur when we imagine them, so leaders can reduce the negative consequences of telling a falsehood merely by convincing their supporters it could have been true.

For example, participants were more likely to lie about achieving a prizewinning die-roll when their actual roll was numerically close to the winning number. It seems participants felt the lie was less unethical to tell when it involved misreporting their roll by a small margin rather than a large one.

Regardless of political views, participants condemned falsehoods. However, falsehoods supporting their views received less condemnation, particularly when a counterfactual suggested how they could have been true.

Supporters of a particular point of view, person or cause may not ignore facts, but may readily excuse a falsehood for which there is a justification, even a weak one. We should thus be wary of our ability to imagine alternatives to reality. When leaders we support encourage us to consider how their lies could have been true, we may hold them to laxer ethical standards.

Extending this further, it helps to explain why people believe that the myths of cults or religions are literally true. The myths align with beliefs and ideologies that believers are motivated to defend, and believers are predisposed to accept counterfactual stories that are told as the truth, repeated, and repeatedly imagined.

When Being Good Frees Us to Be Bad

We are morally inconsistent in at least one other way as well. Studies show that past good deeds tend to liberate individuals to engage in behaviors that are immoral, unethical, or otherwise problematic—behaviors they would otherwise avoid for fear of feeling or appearing immoral. For example, individuals whose past good deeds are fresh in their mind often feel less compelled to give to charity than individuals without such comforting recollections.

Even recounting moral stories about oneself or engaging in moral behavior has the power to license immoral actions. Merely imagining helping others can have the same effect. In 2006 Professors Uzma Khan from Carnegie Mellon and Ravi Dhar from Yale paid participants $2 for a study in which they imagined performing various activities and then were asked if they would donate any of their payment to a charity. Participants who imagined agreeing to help another student for a few hours donated less at the end of the experiment than people in the control condition who did not imagine doing anything generous. The conclusion is that because we view our own intentions as more indicative of our identity than we do our behavior, we allow ourselves to license less-than-exemplary behavior.

In summary, thinking about one’s past moral behavior or merely expressing one’s generous intentions can license people to behave more selfishly than they would otherwise allow themselves to behave.

When Emotions Overtake Morality

It’s important to realize that emotions can override moral actions, particularly when they are activated in a group situation. As individuals, once we adopt another’s perspective, we are more likely to favor that person over others. We might, for example, put a suffering girl whose story we know ahead of everyone else waiting for care, rather than adhere to objective and fair procedures. Psychologist Paul Bloom suggests, therefore, that part of being a good person involves being able to override one’s compassion where necessary, rather than cultivate it.

We empathize most with those around us. Consequently, in a group situation, the beliefs and biases of the group tend to influence our behavior, often in ways that as individuals we might abhor. As Bloom notes: “for every in-group there is an out-group, and that’s where the trouble lies. We would have no Holocaust without the Jews and Germans; no Rwandan massacre without the Tutsis and Hutus.” Since we are evolutionarily biased to belong to a group, we tend to create groups based on any number of reasons: age, sex, race, religion, or ethnicity among them; and sometimes for very trivial reasons like the color of a T-shirt. But even then our behavior within such groups can become extreme, as we know from the news, and you can be killed because your T-shirt is the “wrong” color.

The Power of the Situation

In spring 1967, in Palo Alto, California, history teacher Ron Jones conducted an experiment with his class of 15-year-olds to sample the experience of the attraction and rise of the Nazis in Germany before World War II. In a matter of days the experiment began to get out of control, as those attracted to the movement became aggressive zealots and the rigid rules invited confusion and chaos, as the film based on the event shows.

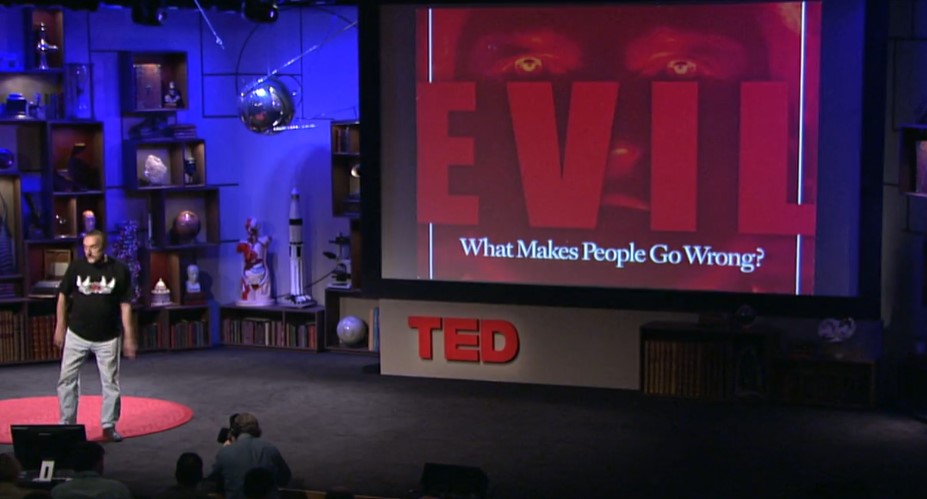

We are endowed with a flexibility of mind that has enabled us not only to survive anywhere in the world, but to move beyond our inheritance. At the same time, as we have seen throughout our violent history, in a group situation, this same ability to adapt can quickly distort our innate morality and lead us to reprehensible behavior. As social psychologists have shown in a number of studies—especially in the work of Drs. Phil Zimbardo and Stanley Milgram—we are particularly vulnerable if the situation is institutionalized. Described as a celebration of the human mind’s capacity to make any of us kind or cruel, caring or indifferent, creative or destructive, villains or heroes, the TED talk by Zimbardo graphically demonstrates this tendency, some of its recent consequences, its implications and solutions.

Philip Zimbardo’s TED Talk on the psychology of evil.

The power of the situation is revealed in many aspects of our society. Studies suggest that behavior is often influenced by factors that have less to do with altruistic and egalitarian motives and more to do with looking altruistic and egalitarian. In experiments or games where generosity is tested, the more observable one’s act is, the more one gives. Even pictures of eyes on a wall or computer screen make people kinder and lead them to behave more honorably.

This idea of “the other” watching or knowing what we do and think has been with us since at least Paleolithic times. It is inherent in the way we see the world: in our three-tiered consciousness. Upon this fundamental scaffold and paired with our inherent characteristics, religions, cultures and civilizations were built and their ideas transmitted. Replacing our sense of “the other” with a consistent internal “observer self” helps to overcome manipulative forces whether consciously or unconsciously applied.